This content was presented to Nelson\Nygaard Staff at a Lunch and Learn webinar on Thursday, January 20th, 2022, and is available as a recording here and embedded below.

The data for today’s webinar can be found in the GitHub repo folder for this module, download the data and code and try it for yourself!

Today’s Agenda

- Introduction - what do I mean by messy? (5 min)

- Tidying Data (20 min)

- Matching to GTFS identifiers (10 min)

- Addressing Missing Data and Recategorization (25 min)

Introduction - What do I Mean by Messy?

There all sorts of ways data can be ‘messy’ – this webinar aims to introduce you to some of the most common issues we deal with in client data at NN. This is meant to be a high-level look at each of these issues – we can delve deeper into particular examples in future sessions.

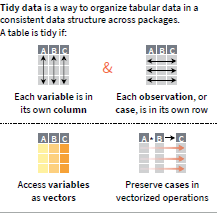

The easiest definition of messy data is data that do not conform to the principles of tidy data. As a reminder of what I mean by tidy data, refer to the conceptual diagram below.

Sometimes, when working with spreadsheet data, you receive data in what we will refer to as a non-tidy format – variable observation information is stored in columns and rows. Tidy data must have all variables as columns and observations as rows – we will go through an example of reshaping from one to the other. For more on the Tidyverse, please refer to the Intro to the Tidyverse Webinar

We will spend the first half of the webinar processing transit ridership data into a single tidy data frame. We will spend the second half of the webinar processing intersection count (multiple modes) data into a tidy data frame.

Beyond questions of tidyness, there are many other ways that data can be insufficient for the needed task. I will talk about a few of them here:

- Data missing necessary identifiers for relevant relationships to other data

- Missing observations implied by data categories

- Cleaning up text strings using regular expressions

- Inconsistent categorizations

- Inconsistent time interval binning

- Geocoding text indicating a spatial location

There are more we won’t get to and I will leave time at the end for enumerating other issues for a future session.

Tidying Data

For better or worse, we will receive Excel spreadsheets, CSVs, and other tabular data of all shapes and sizes from clients. Being able to manipulate data in Excel spreadsheets into a tidy format is essential.

Common issues include:

- Data being spread across multiple files

- Data needing to be reshaped

- Other issues regarding data quality will be dealt with in following sections

library(tidyverse)

library(tidytransit)

library(readxl)

library(janitor)

library(tidytransit)

library(sf)

coord_global = 4326

coord_local = 2227

bus_gtfs = read_gtfs('sample_data/max_gtfs/2020-08-25.zip')

stops = bus_gtfs$stops

routes = bus_gtfs$routes

#Get all file paths into tibble

file_frame = tibble(

file_path = list.files("sample_data/max_ridership/",

full.names = TRUE)

) %>%

#Classify into day type

mutate(days_represented = case_when(

str_detect(file_path,"Sat") ~ "Saturday",

str_detect(file_path,"Sun") ~ "Sunday",

TRUE ~ "All Days"

)) %>%

#Use regular expression to extract year from file path

mutate(year = str_extract(file_path,"20[0-9][0-9]") %>%

as.numeric()) %>%

#Use map function to read multiple files into list-column

mutate(raw_data = map(file_path,~read_excel(.x, sheet = "Stop")))

#Do *most* of cleaning in this step

pre_cleaned_data = file_frame %>%

select(year, days_represented, raw_data) %>%

mutate(clean_data = map(raw_data,function(raw_data){

#For Debugging

#raw_data = file_frame$raw_data[[1]]

clean = raw_data %>%

# get rid of first row, second header

slice(-1) %>%

#flag column used to identify stops

rename(stop_handle = `...1`) %>%

#Pivot all columns except the stop handle (first column)

pivot_longer(cols = 2:last_col(),

names_to = "route_name") %>%

group_by(stop_handle) %>%

#within each stop handle group, can number columns for use of even/odd to sort out boardings/alightings

mutate(col_num = seq_along(stop_handle)+1) %>%

ungroup() %>%

#Route name is only named in some columns, use that and then fill forward

mutate(route_name = case_when(

str_sub(route_name,1,3)=="..." ~ NA_character_,

TRUE ~ route_name

)) %>%

fill(route_name) %>%

#Classify stop activity type into boardings & alightings

mutate(temp_stop_act = case_when(

col_num %% 2 == 0 ~ "ons",

TRUE ~ "offs"

)) %>%

#Get rid of missing cells

filter(!is.na(value)) %>%

#No longer need column number

select(-col_num) %>%

#Pivot on stop activity classification to get separate on and off columns

pivot_wider(names_from = temp_stop_act,

values_from = value) %>%

#Get rid of rows representing totals

filter(!(route_name %in% c("Overall")),

!(stop_handle %in% c("Total"))) %>%

#Coerce ons and offs into numeric (from character created by pivot)

mutate(across(c(ons,offs),as.numeric)) %>%

#Replace any remaining NAs with zeros

mutate(ons = replace_na(ons,0),

offs = replace_na(offs,0)) %>%

#Extract stop code for matching to GTFS stops table

mutate(stop_code = map_chr(stop_handle,function(x){

(str_split(x,"-") %>% unlist())[1]

})) %>%

#Join GTFS stops table

left_join(stops %>%

select(stop_id,stop_code,stop_name,stop_lon,stop_lat)) %>%

#Do some custom correction of names so routes table can be joined (from GTFS)

mutate(route_long_name = case_when(

route_name == "BART" ~ "Bart Express",

route_name == "STOCK Express" ~ "Stockton Express",

route_name == "ACE Express" ~ "Ace Express",

TRUE ~ route_name

)) %>%

#Join routes table

left_join(routes %>% select(route_long_name,route_id)) %>%

#Remove any entries without identifiers

filter(!is.na(stop_id),!is.na(route_id))

})) %>%

#Remove raw data

select(-raw_data) %>%

#Unfurl the clean data frames

unnest(clean_data) %>%

relocate(ons,offs,.after = last_col())

#need to total up weekend data so can be subtracted from all day data to get weekdays

weekend_sum = pre_cleaned_data %>%

filter(days_represented %in% c("Saturday","Sunday")) %>%

group_by(across(-any_of(c("ons","offs","days_represented")))) %>%

summarise(weekend_ons = sum(ons),

weekend_offs = sum(offs))

#Calculate weekday data

weekday_data = pre_cleaned_data %>%

filter(days_represented == "All Days") %>%

left_join(weekend_sum) %>%

mutate(weekday_ons = ons - weekend_ons,

weekday_offs = offs - weekend_offs)

#Bind weekend and new weekday data together, all days data discarded

cleaned_data = pre_cleaned_data %>%

filter(days_represented %in% c("Saturday","Sunday")) %>%

rename(day_cat = days_represented) %>%

bind_rows(weekday_data %>%

rename(day_cat = days_represented) %>%

mutate(day_cat = "Weekday") %>%

select(-contains("weekday"),-contains("weekend")))

Matching to GTFS identifiers

The above was a great example of a dataset that had many different problem with it to be resolved. However, it was missing one issue you will often see with GTFS data – a lack of neccessary identifiers for joining to GTFS tables. Below, we are going to discuss how you might have matched the stops if you were given only a stop name (which can be an inconsistently named string) instead of the stop_code identifier we did have above.

This sort of matching can be done using an approximate string distance, a computer science concept for comparing the similarity of two strings. This is implemented in the {stringdist} package. There are multiple algorithms for carrying out approximate string matching, each of them weighting different factors. It is recommended you check out the documentation to understand each one at a high level and test out a few on your data to see how they perform. A white paper discussing the underlying mathematics is available here.

First we are going to do this matching based solely on names, see how accurate it is, and then we will add one additional piece of information (route) to see if our match can be improved.

library(stringdist)

library(scales)

uq_stop_list = cleaned_data %>%

distinct(stop_handle,stop_code,stop_id,stop_name,route_id,route_long_name)

#Only with stop name, using LCS (longest common substring) algorithm

simp_match_compare = uq_stop_list %>%

distinct(stop_handle,stop_id,stop_code,stop_name) %>%

mutate(matched_stop_info = map(stop_handle, function(sh){

stop_sds = stops %>%

mutate(sdist = stringdist(str_to_upper(stop_name),str_to_upper(sh), method = "lcs"))

matched_stop = stop_sds %>%

arrange(sdist) %>%

select(stop_id,stop_code,stop_name) %>%

rename_with(function(x){paste0("matched_",x)}) %>%

head(1)

return(matched_stop)

})) %>%

unnest(matched_stop_info) %>%

mutate(compare_bool = stop_id == matched_stop_id)

head(simp_match_compare,30)

# A tibble: 30 x 8

stop_handle stop_code stop_id stop_name matched_stop_id

<chr> <chr> <chr> <chr> <chr>

1 25-1 SB SIERRA NS 25 25 Modesto HS - ~ 451

2 28-7TH NB H FS 28 28 7th St & H St 28

3 29-7TH NB K NS 29 29 7th St & K St 29

4 195-7TH SB 99 NS 195 196 7th St betwee~ 452

5 193-7TH SB at BJ 193 194 7th St at BJ ~ 28

6 191-7TH SB CROW FS 191 192 7th St & Crow~ 498

7 194-7TH SB PECO FS 194 195 7th St & Peco~ 498

8 767-9 NB TUCLD MB 767 768 9th St & Cold~ 171

9 498-9TH NB CAMP NS 498 499 9th St & Camp~ 170

10 209-9TH NB D FS 209 210 9th St & D St 171

# ... with 20 more rows, and 3 more variables:

# matched_stop_code <chr>, matched_stop_name <chr>,

# compare_bool <lgl>simp_match_rate = mean(simp_match_compare$compare_bool)

print(percent(simp_match_rate, accuracy = 0.1))

[1] "24.1%"#24.1% match... not very good!

That did not turn out very well… let’s try constraining the search for stops to only stops served by the given route.

uq_route_stops = bus_gtfs$stop_times %>%

distinct(trip_id,stop_id) %>%

left_join(bus_gtfs$trips %>% distinct(trip_id,route_id)) %>%

distinct(route_id,stop_id)

route_match_compare = uq_stop_list %>%

mutate(matched_stop_info = map2(stop_handle, route_id, function(sh, rid){

stop_sds = stops %>%

right_join(uq_route_stops %>% filter(route_id == rid)) %>%

mutate(sdist = stringdist(str_to_upper(stop_name),str_to_upper(sh), method = "lcs"))

matched_stop = stop_sds %>%

arrange(sdist) %>%

select(stop_id,stop_code,stop_name) %>%

rename_with(function(x){paste0("matched_",x)}) %>%

head(1)

return(matched_stop)

})) %>%

unnest(matched_stop_info) %>%

mutate(compare_bool = stop_id == matched_stop_id)

head(route_match_compare,30)

# A tibble: 30 x 10

stop_handle stop_code stop_id stop_name route_long_name route_id

<chr> <chr> <chr> <chr> <chr> <chr>

1 25-1 SB SIERR~ 25 25 Modesto ~ Route 21 1

2 25-1 SB SIERR~ 25 25 Modesto ~ Route 36 15

3 28-7TH NB H FS 28 28 7th St &~ Route 21 1

4 28-7TH NB H FS 28 28 7th St &~ Route 36 15

5 29-7TH NB K NS 29 29 7th St &~ Route 21 1

6 29-7TH NB K NS 29 29 7th St &~ Route 33 13

7 29-7TH NB K NS 29 29 7th St &~ Route 36 15

8 195-7TH SB 99~ 195 196 7th St b~ Route 29 9

9 193-7TH SB at~ 193 194 7th St a~ Route 29 9

10 191-7TH SB CR~ 191 192 7th St &~ Route 29 9

# ... with 20 more rows, and 4 more variables: matched_stop_id <chr>,

# matched_stop_code <chr>, matched_stop_name <chr>,

# compare_bool <lgl>route_match_rate = mean(route_match_compare$compare_bool)

print(percent(route_match_rate, accuracy = 0.1))

[1] "44.2%"#44% match... still not that great!

This matching is bad enough that I would recommend finishing the match manually – the algorithm still saved you matching 44% of stops! If you want, you can even bring the nearest strings into a separate sub-group so when you are manually matching, you do not have to compare as many. As you can see, messy data can be quite a budget issue!

Further Missing GTFS Info

Stop names are not the only thing that can be missing – we did not analyze trip level data for this project, but trip identifiers can also often be missing. You then may have to design an algorithm to match observations to the relevant trip_id based on any time of day information, as well as the stop (and any order information) that is available. I will not have time to go over this example, so I will point to a script where I did this – trip IDs had to be matched in processing APC data for Austin. If there is interest, we can go over this script in a future workshop. However, this matching algorithm is an educated guess – it will not be accurate 100% of the time, and so will result in erroneous assignments to some degree. Assignments should be checked to minimize erroneous assignment.

The best solution to missing data issues is to ask the client if they can fill in the missing information. Unfortunately, sometimes they cannot, and as long as they are willing to budget in the time to clean their data, it usually can be cleaned, even if that involves some manual matching time.

Addressing Missing Data and Recategorization

For this section we are going to use a different dataset – a set of vehicle volumes collected for different intersections in Honolulu. Thank you to the Honolulu Vision Zero project team for allowing us to use this data for demonstration purposes.

This dataset allows us to illustrate a number of issues that can be encountered in data. Key concepts are bulleted below, and comments are provided throughout the code.

- Missing observations implied by data categories. Here we will want to make use of the

expand_grid()function, which will ‘expand’ the data frame to include all unique combinations available of the variables in the data frame provided to the function. It is a very handy to tool to identify missing observations that may be implied by the structure of the data, but are not explicitly identified as such in the raw data. - Cleaning up text strings using regular expressions. We did this in the earlier section as well, but we put some more advanced regular expressions to work below. We talked about this in a previous webinar worth referring back to. A tool I made use of when writing this script was regex101.com, which provides many tools for setting up and troubleshooting a regular expression.

- Inconsistent categorizations. We see throughout the data below that turning categories and vehicle type categories are referred to inconsistently. Using

case_when()(or alternatively,left_join()ing a table) we can recategorize the data into simpler and more consistent categories. Keep in mind what you ultimately want to do with the data to determine how to re-categorize. Simpler (e.g., fewer) categories are always easier, but preserve the variation you need for the analysis you are ultimately conducting. - Inconsistent time interval binning. Data observed in time intervals can sometimes have inconsistent time bins – the traffic volumes below are observed at both 5 minute and 15 minute intervals. We will roll up observations to 15 minute intervals to acheive a consistent data resolution.

- Geocoding text indicating a spatial location. We have talked about how to use the

{googleway}package to geocode in several previous webinars. It comes in handy here to geocode the intersection names that we extract from the filenames. This helps both for making the final data more useful, as well as imputing missing data, assuming that there is a spatial relationship between traffic volumes.

library(lubridate)

library(parsnip)

library(naniar)

library(hms)

library(scales)

library(googleway)

raw_tibble = tibble(

#Grabbing both full path for reading in, and file name for parsing information from using regular expressions

file_name = list.files("sample_data/honolulu"),

file_path = list.files("sample_data/honolulu", full.names=TRUE)

) %>%

#Reading in each CSV

mutate(raw_data = map(file_path,~read_csv(.x))) %>%

#Extraction of intersection name, and then to/from timestamp.

mutate(intersection_name = str_extract(file_name,"(?<=Intersection_-_).+?(?=[0-9]{4}?)") %>%

str_replace_all("_"," ") %>%

str_trim(),

from_timestamp = str_extract(file_name,"(?<=_)(\\d{4}.+)(?=_UTC_-_)") %>%

ymd_hms(),

to_timestamp = str_extract(file_name,"(?<=_-_)(\\d{4}.+)(?=_UTC_)") %>%

ymd_hms()) %>%

#select only columns needed

select(raw_data,intersection_name,from_timestamp,to_timestamp) %>%

#add identifier to intersection

arrange(intersection_name) %>%

mutate(intersection_id = seq_along(intersection_name))

#Unnest the raw data observations to get a look at them all

unfurled_tibble = raw_tibble %>%

unnest(raw_data) %>%

mutate(measure_timestamp = ymd_hms(time)) %>%

select(-time)

#Take a look at the data

head(unfurled_tibble,100)

# A tibble: 100 x 8

turn count road_user_type intersection_name from_timestamp

<chr> <dbl> <chr> <chr> <dttm>

1 EBL 0 All Vehicles Aalapapa Drive and ~ 2017-09-09 21:00:00

2 EBR 26 All Vehicles Aalapapa Drive and ~ 2017-09-09 21:00:00

3 EBT 0 All Vehicles Aalapapa Drive and ~ 2017-09-09 21:00:00

4 EBU 0 All Vehicles Aalapapa Drive and ~ 2017-09-09 21:00:00

5 NBL 0 All Vehicles Aalapapa Drive and ~ 2017-09-09 21:00:00

6 NBR 0 All Vehicles Aalapapa Drive and ~ 2017-09-09 21:00:00

7 NBT 0 All Vehicles Aalapapa Drive and ~ 2017-09-09 21:00:00

8 NBU 0 All Vehicles Aalapapa Drive and ~ 2017-09-09 21:00:00

9 SBL 0 All Vehicles Aalapapa Drive and ~ 2017-09-09 21:00:00

10 SBR 0 All Vehicles Aalapapa Drive and ~ 2017-09-09 21:00:00

# ... with 90 more rows, and 3 more variables: to_timestamp <dttm>,

# intersection_id <int>, measure_timestamp <dttm>#Some of the data is in 5 minute bins, some is in 15 minute bins. Need to roll up to 15 minute bins.

#Also getting rid of turning movement, data is too inconsistent

#Back to nested for some grid expansion to check for missing observations from any sub-categories and collapse bins

nested_tibble = unfurled_tibble %>%

select(intersection_id,intersection_name,from_timestamp,to_timestamp,

measure_timestamp,turn,road_user_type,count) %>%

#Nesting by intersection, i.e. the columns not included in the nest command below

nest(raw_obs = measure_timestamp:count) %>%

mutate(expanded_obs = pmap(.l = list(raw_obs),

.f = function(raw_obs){

#For initial writing and debugging

#raw_obs = nested_tibble$raw_obs[[1]]

collapsed_obs = raw_obs %>%

#The `cut` function has methods for POSIXct data such that you can classify into bins like this

mutate(timestamp_bin = cut(measure_timestamp,breaks="15 mins")) %>%

#Simplify road user type

mutate(simp_road_user_type = case_when(

road_user_type %in% c("All Vehicles","Heavy Vehicles",

"Articulated Trucks","Buses","Cars",

"Light Goods Vehicles","Motorcycles","Single-Unit Trucks",

"Cars & Light Goods") ~ "Motorized Vehicles",

road_user_type == "Peds" ~ "Pedestrians",

road_user_type == "Bikes" ~ "Bicycles"

)) %>%

#Roll up

group_by(simp_road_user_type,timestamp_bin) %>%

summarise(count = sum(count,na.rm = TRUE)) %>%

ungroup() %>%

#Localize timezone (from UTC to Honolulu time)

mutate(timestamp_bin = with_tz(timestamp_bin,tzone = "America/Honolulu"))

#Enumerate unique time bins

uq_time_bins = as.POSIXct(unique(collapsed_obs$timestamp_bin))

#Expand grid for unique combinations of road user type and time bin, join above data frame to expanded grid

expanded_grid = collapsed_obs %>%

distinct(simp_road_user_type) %>%

expand_grid(timestamp_bin = uq_time_bins) %>%

left_join(collapsed_obs %>%

mutate(timestamp_bin = as.POSIXct(timestamp_bin)))

return(expanded_grid)

}))

#Unnest data again, remove raw observations

expanded_tibble = nested_tibble %>%

select(-raw_obs) %>%

unnest(expanded_obs)

#How much data is missing?

mean(is.na(expanded_tibble$count)) %>% percent(accuracy=0.1) #0.2% of rows are missing

[1] "0.2%"Now, we will geocode the intersection names so you we can use the coordinates of the intersection to help impute missing observations.

#Using an API Key set up for NN R training -- please use your own API key below

set_key('<YOUR API KEY HERE>')

coord_local = 3759 # Pulled for Honolulu from https://nelsonnygaard.shinyapps.io/coord-system-reference/

uq_intersections = expanded_tibble %>%

distinct(intersection_id,intersection_name) %>%

#Fetch geocode result for each unique intersection name

mutate(geocode_result = map(intersection_name,function(int_string){

#Append with Honolulu, HI to help Google find the intersection

geo_string = paste0(int_string,", Honolulu, HI")

geo_result = google_geocode(geo_string,

# Provide geocoding bounds to help narrow the search

bounds = list(c(21.15836519019052, -158.3157853827537),

c(21.816161973528708, -157.72537889593727)))

#Make sure geocode went OK, missing if not

if(geo_result$status == "OK"){

geo_loc = geo_result$results$geometry$location

}else{

geo_loc = tibble(lat = NA, lng = NA)

}

return(geo_loc)

})) %>%

unnest(geocode_result)

intersection_geom = uq_intersections %>%

filter(round(lat)==21) %>% # Remove bad geocodes, there was still 1 in there

#Some locations got multiple results, so after removing the bad one, taking average

group_by(intersection_id,intersection_name) %>%

summarise(lat = mean(lat,na.rm=TRUE),lng = mean(lng,na.rm=TRUE)) %>%

st_as_sf(coords = c("lng","lat"), crs=4326) %>%

#Transforming to planar coordinates for imputation exercise

st_transform(coord_local)

intersection_coords = bind_cols(

intersection_geom %>% st_drop_geometry(),

intersection_geom %>% st_coordinates() %>%

as_tibble()

)

Now we can carry out the missing data imputation. Often, I use the {mice} package for this, which does much of the work for you in imputing missing values. However, to better show you what is going on in the process of imputation, I am setting up my own Random Forest model below using the {parsnip} package. I used a random forest model because it is extremely flexible, but there are many others that could have been used (e.g., linear regression).

imp_tibble = expanded_tibble %>%

#Join coordinates

left_join(intersection_coords) %>%

select(-from_timestamp,-to_timestamp) %>%

#Calculate some additional features for the model

mutate(date = as.Date(timestamp_bin),

weekday = weekdays(date), #Weekday

hour_num = hour(timestamp_bin) + minute(timestamp_bin)/60) %>% #Hour of day as decimal

#Coerce my character data into factors (categorical data)

mutate(intersection_name = factor(intersection_name),

simp_road_user_type = factor(simp_road_user_type),

weekday = factor(weekday)) %>%

#Create a boolean column to let me know which data have to be imputed (and ultimately were in final dataset)

mutate(imputed = is.na(count))

#Nest data again for imputation separately by mode

imp_tibble_nested = imp_tibble %>%

select(simp_road_user_type, everything()) %>%

#Nest by mode

nest(mode_data = 2:last_col()) %>%

mutate(completed_data = map(mode_data,function(mode_data){

#For debugging

#mode_data = imp_tibble_nested$mode_data[[1]]

#Your model training set is complete observations

training_set = mode_data %>%

filter(imputed == FALSE)

#The set you will be imputing has missing observations

imp_set = mode_data %>%

filter(imputed == TRUE)

if(nrow(imp_set)>0){

#Train a model. Can interactively check for model performance when writing by printing object

trained_model = rand_forest(mode = "regression",mtry = 3, trees = 1000) %>%

fit(count ~ weekday + hour_num + X + Y,

data = training_set)

#Predict missing observations using trained model

imputed_set = imp_set %>%

mutate(count = predict(trained_model, new_data = .)$`.pred`)

#combine datasets back together

complete_set = bind_rows(training_set,imputed_set)

}else{

complete_set = training_set

}

return(complete_set)

}))

#Behold your completed dataset!

complete_tibble = imp_tibble_nested %>%

select(-mode_data) %>%

unnest(completed_data)

head(complete_tibble, 100)

# A tibble: 100 x 11

simp_road_user_type intersection_id intersection_name

<fct> <int> <fct>

1 Bicycles 1 Aalapapa Drive and Mokulua Dri~

2 Bicycles 1 Aalapapa Drive and Mokulua Dri~

3 Bicycles 1 Aalapapa Drive and Mokulua Dri~

4 Bicycles 1 Aalapapa Drive and Mokulua Dri~

5 Bicycles 1 Aalapapa Drive and Mokulua Dri~

6 Bicycles 1 Aalapapa Drive and Mokulua Dri~

7 Bicycles 1 Aalapapa Drive and Mokulua Dri~

8 Bicycles 1 Aalapapa Drive and Mokulua Dri~

9 Bicycles 1 Aalapapa Drive and Mokulua Dri~

10 Bicycles 1 Aalapapa Drive and Mokulua Dri~

# ... with 90 more rows, and 8 more variables: timestamp_bin <dttm>,

# count <dbl>, X <dbl>, Y <dbl>, date <date>, weekday <fct>,

# hour_num <dbl>, imputed <lgl>Other ‘Messy’ Data Topics for a Future Session

- Measurement errors and/or statistical outliers

- Assigning GTFS identifiers based on location (e.g., if you have AVL observations without identifiers)

- Assigning GTFS identifiers to high-resolution AVL (rolling up big data into something more useful)

- Cleaning up geographic data (negatives assigned or not)

- … others folks can think of that we did not cover here?

Further resources

Blog Posts

- https://rfortherestofus.com/2019/12/how-to-clean-messy-data-in-r/

- https://rpubs.com/odenipinedo/cleaning-data-in-R

- https://towardsdatascience.com/data-cleaning-in-r-made-simple-1b77303b0b17

DataCamp Courses

- Cleaning Data in R

- Cleaning Data in Python

- Dealing with Missing Data in R

- Handling Missing Data with Imputations in R

- Dealing with Missing Data in Python